Evaluating the Reliability of Large Language Models in Identifying Psychological Constructs in Steam Reviews

Dang Khoa Nguyen

Supervisor: Dr Cody Phillips

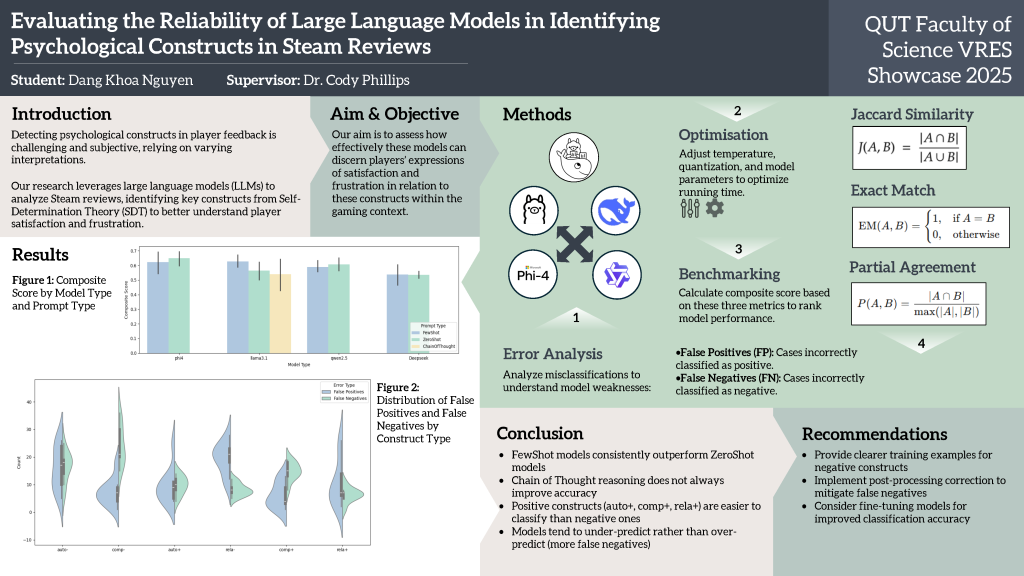

In this study, we explore the potential of large language models (LLMs) as tools for games user research by analysing Steam reviews to detect three key psychological constructs defined in Self-Determination Theory (SDT): the basic needs for competence, autonomy, and relatedness. Our aim is to assess how reliably these models can discern players' expressions of satisfaction and frustration of each need within the gaming context.
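
Crossing the three needs with the two valences gives six possible labels per review. The abstract does not specify how model output was structured, so the following is only a minimal sketch under an assumed format: the model is asked to reply with JSON of the form `{"labels": [{"construct": ..., "valence": ...}]}` (a hypothetical schema), and the helper `parse_labels` (also hypothetical) keeps only labels that fall within the six SDT categories.

```python
import json
from dataclasses import dataclass
from enum import Enum


class Construct(Enum):
    COMPETENCE = "competence"
    AUTONOMY = "autonomy"
    RELATEDNESS = "relatedness"


class Valence(Enum):
    SATISFACTION = "satisfaction"
    FRUSTRATION = "frustration"


@dataclass(frozen=True)
class Label:
    """One (construct, valence) pair; frozen so labels can be stored in sets."""
    construct: Construct
    valence: Valence


def parse_labels(raw: str) -> set[Label]:
    """Parse a reply in the ASSUMED schema
    {"labels": [{"construct": "competence", "valence": "frustration"}, ...]}
    into Label objects, skipping anything outside the six SDT labels."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return set()  # unparseable reply: treated as "no construct detected"
    items = data.get("labels") if isinstance(data, dict) else None
    if not isinstance(items, list):
        return set()
    labels = set()
    for item in items:
        try:
            labels.add(Label(Construct(item["construct"].lower()),
                             Valence(item["valence"].lower())))
        except (KeyError, ValueError, AttributeError, TypeError):
            continue  # off-schema or malformed entry, e.g. an invented construct
    return labels
```

Discarding off-schema labels rather than raising an error keeps one malformed reply from aborting a batch run, at the cost of silently counting that reply as "no construct detected".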

To achieve this, we developed a controlled testing framework that runs models in Docker containers for reproducibility and on CUDA-enabled GPUs for efficient computation. We experimented with a range of configurations, adjusting model parameters, quantisation levels, and temperature settings, to optimise detection accuracy. We also compared zero-shot, few-shot, and chain-of-thought prompting to determine how each affects the models' ability to identify nuanced psychological signals in user-generated content. Finally, we benchmarked performance against other models available through the Ollama platform.
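
The three prompting strategies differ only in what surrounds the review text. The sketch below illustrates this under stated assumptions and is not the study's actual prompts: the instruction wording, the single few-shot example, and the helper names `build_prompt`, `build_request` and `query_ollama` are all hypothetical. The request targets Ollama's `/api/generate` endpoint on its default port 11434, assuming a server is already running (for instance inside a Docker container with GPU access). Temperature is passed through the `options` field, and the quantisation level is selected through the model tag.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint

# Hypothetical instruction text; the study's real prompt wording is not given.
INSTRUCTION = (
    "Identify expressions of satisfaction or frustration of the Self-Determination "
    "Theory needs (competence, autonomy, relatedness) in the Steam review below. "
    'Answer with JSON: {"labels": [{"construct": ..., "valence": ...}]}.'
)

# Hypothetical worked example, used only by the few-shot strategy.
FEW_SHOT_EXAMPLES = [
    ("I finally beat the boss after hours of practice!",
     '{"labels": [{"construct": "competence", "valence": "satisfaction"}]}'),
]


def build_prompt(review: str, strategy: str) -> str:
    """Assemble a zero-shot, few-shot or chain-of-thought prompt for one review."""
    parts = [INSTRUCTION]
    if strategy == "few_shot":
        # Prepend solved examples so the model can imitate the answer format.
        for text, answer in FEW_SHOT_EXAMPLES:
            parts.append(f"Review: {text}\nAnswer: {answer}")
    elif strategy == "chain_of_thought":
        # Ask for explicit reasoning per need before the final JSON answer.
        parts.append("Think step by step about each need in turn, then give the "
                     "JSON answer on the final line.")
    elif strategy != "zero_shot":
        raise ValueError(f"unknown strategy: {strategy}")
    parts.append(f"Review: {review}\nAnswer:")
    return "\n\n".join(parts)


def build_request(model: str, prompt: str, temperature: float) -> dict:
    """Request body for Ollama's /api/generate endpoint."""
    return {
        "model": model,      # the tag encodes model size and quantisation level
        "prompt": prompt,
        "stream": False,     # return a single JSON object instead of a stream
        "options": {"temperature": temperature},
    }


def query_ollama(body: dict, url: str = OLLAMA_URL) -> str:
    """POST the request to a running Ollama server and return the generated text."""
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["response"]
```

For example, `query_ollama(build_request("llama3:8b-instruct-q4_0", build_prompt(review, "few_shot"), 0.0))` would query a 4-bit quantised Llama 3 variant at temperature 0. The tag is only an example; the tags actually available are listed in the Ollama model library. With the chain-of-thought strategy, the answer sits on the final line of the reply, after the reasoning.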

Our findings indicate that while LLMs are proficient at capturing general sentiment, they struggle to differentiate the subtler aspects of competence, autonomy, and relatedness. Techniques such as chain-of-thought prompting provided moderate improvements, yet the models still could not reliably distinguish between closely related constructs. This suggests that accurately parsing psychological nuances in game reviews may require more sophistication than general-purpose LLMs, used without domain-specific training, currently offer.
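
The abstract does not state how reliability was quantified. One common measure of agreement between model labels and human coding, assumed here purely for illustration, is Cohen's kappa, kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the agreement expected by chance from each rater's label frequencies. Computed separately for each construct (with a per-review label such as "satisfaction", "frustration" or "none"), a low kappa for one construct would put a number on the kind of difficulty described above. The function name `cohens_kappa` is hypothetical.

```python
from collections import Counter


def cohens_kappa(human: list[str], model: list[str]) -> float:
    """Cohen's kappa between two equal-length sequences of categorical labels."""
    if len(human) != len(model) or not human:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(human)
    # p_o: fraction of reviews on which both raters chose the same label.
    observed = sum(h == m for h, m in zip(human, model)) / n
    # p_e: chance that two independent raters with these label frequencies agree.
    h_counts, m_counts = Counter(human), Counter(model)
    expected = sum(h_counts[c] * m_counts[c] for c in h_counts) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical category for every review
    return (observed - expected) / (1 - expected)
```

For instance, with human codes `["satisfaction", "satisfaction", "frustration", "none"]` and model labels `["satisfaction", "frustration", "frustration", "none"]` for one construct, p_o = 0.75 and p_e = 5/16, giving kappa = 7/11 (about 0.64). Unlike raw accuracy, kappa does not reward a model for simply predicting the most frequent label.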

This work contributes to the emerging field of games user research by highlighting both the promise and limitations of LLMs in extracting meaningful psychological insights from player feedback. While our results underscore the potential of these models for advancing research into player motivation and behaviour, they also call for further refinement and domain-specific training to enhance their reliability. Future work in this area could pave the way for more sophisticated, data-driven approaches to understanding player experiences in gaming environments.

Media Attributions

- Evaluating the reliability of large language models in identifying psychological constructs in Steam reviews © Dang Khoa Nguyen is licensed under a CC BY-NC (Attribution NonCommercial) license